TensorFlow: Tabular Regression

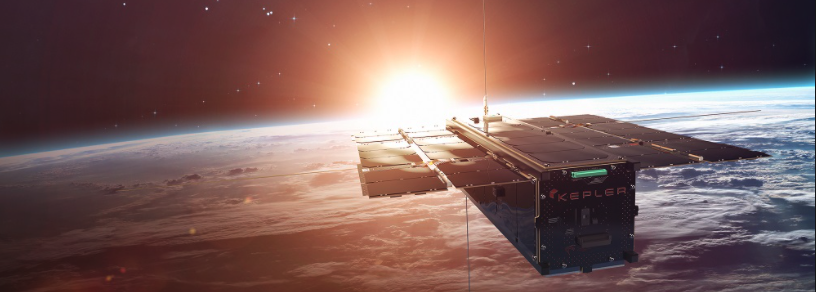

Predicting Exoplanet Surface Temperature Using Kepler Satellite Sensor Data.

💾 Data

Refer to the Example Datasets documentation for more information.

This dataset consists of:

- Features: characteristics of the planet in the context of its solar system.
- Label: the surface temperature of the planet (`SurfaceTempK`, in kelvin).

from aiqc import datum

df = datum.to_pandas('exoplanets.parquet')
df.sample(5)

|   | TypeFlag | PlanetaryMassJpt | PeriodDays | SurfaceTempK | DistFromSunParsec | HostStarMassSlrMass | HostStarRadiusSlrRad | HostStarMetallicity | HostStarTempK |
|---|---|---|---|---|---|---|---|---|---|
| 5 | 0 | 0.2500 | 19.224180 | 707.2 | 650.00 | 1.070 | 1.0200 | 0.12 | 5777.0 |
| 6 | 0 | 0.1700 | 39.031060 | 557.9 | 650.00 | 1.070 | 1.0200 | 0.12 | 5777.0 |
| 7 | 0 | 0.0220 | 1.592851 | 1601.5 | 650.00 | 1.070 | 1.0200 | 0.12 | 5777.0 |
| 15 | 0 | 1.2400 | 2.705782 | 2190.0 | 200.00 | 1.630 | 2.1800 | 0.12 | 6490.0 |
| 16 | 0 | 0.0195 | 1.580404 | 604.0 | 14.55 | 0.176 | 0.2213 | 0.10 | 3250.0 |
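To make the feature/label distinction concrete, here is a minimal sketch using two of the sample rows from the table above (rows 5 and 16). It uses plain Python dictionaries rather than pandas, and the helper `split_features_label` is hypothetical; in this tutorial, the `Target(column = 'SurfaceTempK')` definition in the pipeline presumably performs the equivalent separation for you.

```python
# Two sample rows copied from the table above (rows 5 and 16).
rows = [
    {"TypeFlag": 0, "PlanetaryMassJpt": 0.2500, "PeriodDays": 19.224180,
     "SurfaceTempK": 707.2, "DistFromSunParsec": 650.00,
     "HostStarMassSlrMass": 1.070, "HostStarRadiusSlrRad": 1.0200,
     "HostStarMetallicity": 0.12, "HostStarTempK": 5777.0},
    {"TypeFlag": 0, "PlanetaryMassJpt": 0.0195, "PeriodDays": 1.580404,
     "SurfaceTempK": 604.0, "DistFromSunParsec": 14.55,
     "HostStarMassSlrMass": 0.176, "HostStarRadiusSlrRad": 0.2213,
     "HostStarMetallicity": 0.10, "HostStarTempK": 3250.0},
]

LABEL = "SurfaceTempK"  # the regression target


def split_features_label(row, label=LABEL):
    """Return (features, label_value): every column except `label` is a feature."""
    features = {name: value for name, value in row.items() if name != label}
    return features, row[label]


for row in rows:
    features, temp_k = split_features_label(row)
    print(len(features), "features ->", temp_k, "K")
```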

from aiqc.orm import Dataset

shared_dataset = Dataset.Tabular.from_df(df)

🚰 Pipeline

Refer to the High-Level API documentation for more information.

from aiqc.mlops import Pipeline, Input, Target, Stratifier
from sklearn.preprocessing import StandardScaler, RobustScaler, OneHotEncoder
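For intuition about what these three scikit-learn encoders compute, the sketch below reimplements their core arithmetic in plain Python, assuming scikit-learn's defaults: `RobustScaler` centers on the median and divides by the 25th-to-75th percentile range, `StandardScaler` subtracts the mean and divides by the population standard deviation, and `OneHotEncoder` emits one column per sorted category. The helper names are hypothetical, and this is not the library's implementation (it skips unknown-category and NaN handling, for example). The zero-scale guard mirrors scikit-learn treating a zero scale as 1.

```python
import statistics


def robust_scale(values):
    """(x - median) / IQR, like RobustScaler with quantile_range=(25, 75)."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = (q3 - q1) or 1.0  # a zero IQR is replaced by 1
    return [(v - median) / iqr for v in values]


def standard_scale(values):
    """(x - mean) / population std, like StandardScaler's defaults."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values) or 1.0  # a zero std is replaced by 1
    return [(v - mean) / std for v in values]


def one_hot_dense(values):
    """Dense one-hot rows (plain lists, not a sparse matrix), categories sorted."""
    categories = sorted(set(values))
    return [[1.0 if v == c else 0.0 for c in categories] for v in values]


# PeriodDays and SurfaceTempK values from the sample table.
period_days = [19.224180, 39.031060, 1.592851, 2.705782, 1.580404]
surface_temp_k = [707.2, 557.9, 1601.5, 2190.0, 604.0]
type_flags = [0, 2, 0, 1]  # hypothetical integer categories, illustration only

print(robust_scale(period_days))
print(standard_scale(surface_temp_k))
print(one_hot_dense(type_flags))
```

Because `RobustScaler` uses the median and interquartile range, a few extreme periods (such as 39 days among values near 2) shift the scaling far less than they would with mean and standard deviation.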

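pipeline = Pipeline(
    Input(
        dataset = shared_dataset,
        encoders = [
            # Scale float columns by their median and interquartile range.
            Input.Encoder(
                RobustScaler(),
                dtypes = ['float64']
            ),
            # One-hot encode integer (categorical) columns.
            Input.Encoder(
                OneHotEncoder(),
                dtypes = ['int64']
            )
        ]
    ),

    Target(
        dataset = shared_dataset
        , column = 'SurfaceTempK'
        , encoder = Target.Encoder(StandardScaler())
    ),

    # Hold out 12% of rows for test and 22% for validation, without
    # cross-validation folds; the continuous label is binned for stratification.
    Stratifier(
        size_test = 0.12
        , size_validation = 0.22
        , fold_count = None
        , bin_count = 4
    )
)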

└── Info - System overriding user input to set `sklearn_preprocess.copy=False`.

This saves memory when concatenating the output of many encoders.

└── Info - System overriding user input to set `sklearn_preprocess.copy=False`.

This saves memory when concatenating the output of many encoders.

└── Info - System overriding user input to set `sklearn_preprocess.sparse=False`.

This would have generated 'scipy.sparse.csr.csr_matrix', causing Keras training to fail.
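The `Stratifier` holds out a test split and a validation split, and because `SurfaceTempK` is continuous, `bin_count = 4` lets those splits be stratified on binned temperatures. A minimal sketch of that idea in plain Python follows. It assumes quantile bins and treats `size_test` and `size_validation` as fractions of the whole dataset; AIQC's own binning and splitting may differ, and the function names and synthetic temperatures are hypothetical.

```python
import random
import statistics


def quantile_bins(values, bin_count):
    """Assign each continuous value a bin id in 0..bin_count-1 via quantile cut points."""
    cuts = statistics.quantiles(values, n=bin_count, method="inclusive")
    # A value's bin id is the number of cut points it lies strictly above.
    return [sum(value > cut for cut in cuts) for value in values]


def stratified_split(values, size_test, size_validation, bin_count, seed=0):
    """Return (train, validation, test) row indices, sampling every bin proportionally.

    Assumption: both sizes are fractions of the full dataset.
    """
    rng = random.Random(seed)
    bins = quantile_bins(values, bin_count)
    train, validation, test = [], [], []
    for bin_id in range(bin_count):
        members = [i for i, b in enumerate(bins) if b == bin_id]
        rng.shuffle(members)
        n_test = round(len(members) * size_test)
        n_val = round(len(members) * size_validation)
        test += members[:n_test]
        validation += members[n_test:n_test + n_val]
        train += members[n_test + n_val:]
    return train, validation, test


# Synthetic temperatures (100 evenly spaced values), for illustration only.
temps = [100.0 + 10 * i for i in range(100)]
train, validation, test = stratified_split(temps, size_test=0.12,
                                           size_validation=0.22, bin_count=4)
print(len(train), len(validation), len(test))
```

Every bin contributes roughly 12% of its rows to the test split, so the test set spans the full temperature range instead of clustering at one end.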

🧪 Experiment

Refer to the High-Level API documentation for more information.

from aiqc.mlops import Experiment, Architecture, Trainer
import tensorflow as tf
from tensorflow.keras import layers as l
from aiqc.utils.tensorflow import TrainingCallback

def fn_build(features_shape, label_shape, **hp):
    m = tf.keras.models.Sequential()
    m.add(l.Input(shape=features_shape))

    # The 'blocks' hyperparameter sets the number of hidden layers.
    for block in range(hp['blocks']):
        # The 'neuron_count' hyperparameter sets the width of each hidden layer.
        m.add(l.Dense(hp['neuron_count']))

        # Each block follows BatchNorm, Activation, Dropout (B.A.D.);
        # the 'batch_norm' hyperparameter toggles the BatchNorm step.
        if hp['batch_norm']:
            m.add(l.BatchNormalization())
        m.add(l.Activation('relu'))
        m.add(l.Dropout(0.2))

    # Linear output layer: one unit per label column.
    m.add(l.Dense(label_shape[0]))
    return m
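The `blocks`, `neuron_count`, and `batch_norm` hyperparameters change the network's size. As a rough check, the sketch below counts the parameters `fn_build` would create, using the standard Keras accounting: a `Dense` layer has `inputs * units` weights plus `units` biases, `BatchNormalization` holds 4 values per unit (2 trainable, 2 non-trainable), and `Activation` and `Dropout` have none. The encoded feature width is an assumption: `n_features = 10` is hypothetical, because it depends on how many categories `OneHotEncoder` finds in the integer columns.

```python
def count_params(n_features, neuron_count, blocks, batch_norm, n_labels=1):
    """Total (trainable + non-trainable) parameters of the model fn_build produces."""
    total = 0
    width_in = n_features
    for _ in range(blocks):
        total += width_in * neuron_count + neuron_count  # Dense kernel + bias
        if batch_norm:
            total += 4 * neuron_count  # gamma, beta, moving mean, moving variance
        # Activation and Dropout add no parameters.
        width_in = neuron_count
    total += width_in * n_labels + n_labels  # final linear Dense layer
    return total


n_features = 10  # hypothetical encoded width
for neuron_count in (24, 36):
    for batch_norm in (True, False):
        print(neuron_count, batch_norm,
              count_params(n_features, neuron_count, blocks=2, batch_norm=batch_norm))
```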

def fn_train(
    model, loser, optimizer,
    train_features, train_label,
    eval_features, eval_label,
    **hp
):
    model.compile(
        loss = loser
        , optimizer = optimizer
        , metrics = ['mean_squared_error']
    )

    # Early-stopping thresholds checked by AIQC's MetricCutoff callback.
    metrics_cutoffs = [
        dict(metric='val_loss', cutoff=0.025, above_or_below='below'),
        dict(metric='loss', cutoff=0.025, above_or_below='below'),
    ]
    cutoffs = TrainingCallback.MetricCutoff(metrics_cutoffs)

    model.fit(
        train_features, train_label
        , validation_data = (eval_features, eval_label)
        , verbose = 0
        , batch_size = hp['batch_size']
        , callbacks = [tf.keras.callbacks.History(), cutoffs]
        , epochs = hp['epoch_count']
    )
    return model
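`TrainingCallback.MetricCutoff` stops training early once the listed metrics cross their cutoffs. The sketch below shows one plausible reading of the `metrics_cutoffs` rules as a plain function, assuming every rule must hold at the end of an epoch, with 'below' meaning `<=` and 'above' meaning `>=`. It is not AIQC's source, and `cutoffs_satisfied` is a hypothetical name.

```python
import operator


def cutoffs_satisfied(logs, metrics_cutoffs):
    """Return True when every rule in `metrics_cutoffs` holds for this epoch's `logs`."""
    for rule in metrics_cutoffs:
        value = logs.get(rule["metric"])
        if value is None:  # metric not reported this epoch: keep training
            return False
        # 'below' means value <= cutoff; anything else is treated as 'above' (>=).
        compare = operator.le if rule["above_or_below"] == "below" else operator.ge
        if not compare(value, rule["cutoff"]):
            return False
    return True


metrics_cutoffs = [
    dict(metric='val_loss', cutoff=0.025, above_or_below='below'),
    dict(metric='loss', cutoff=0.025, above_or_below='below'),
]

# Inside a tf.keras.callbacks.Callback, on_epoch_end(self, epoch, logs) could call
# this and set self.model.stop_training = True when it returns True.
print(cutoffs_satisfied({'loss': 0.020, 'val_loss': 0.031}, metrics_cutoffs))  # False
print(cutoffs_satisfied({'loss': 0.020, 'val_loss': 0.019}, metrics_cutoffs))  # True
```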

hyperparameters = dict(
    batch_size = [3]
    , blocks = [2]
    , batch_norm = [True, False]
    , epoch_count = [75]
    , neuron_count = [24, 36]
    , learning_rate = [0.01]
)
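Each hyperparameter maps to a list of candidate values. Assuming AIQC expands them as a full grid (a Cartesian product), the sketch below enumerates the combinations with `itertools.product`. With `repeat_count = 2` from the `Trainer` definition, the 4 combinations yield 8 training jobs, which agrees with the `8/8` in the training progress bar.

```python
import itertools

hyperparameters = dict(
    batch_size=[3], blocks=[2], batch_norm=[True, False],
    epoch_count=[75], neuron_count=[24, 36], learning_rate=[0.01],
)

# Cartesian product over the value lists: one dict per combination.
keys = list(hyperparameters)
combinations = [dict(zip(keys, values))
                for values in itertools.product(*hyperparameters.values())]

repeat_count = 2  # from the Trainer in this tutorial
for combo in combinations:
    print(combo)
print(len(combinations), "combinations x", repeat_count, "repeats =",
      len(combinations) * repeat_count, "jobs")
```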

experiment = Experiment(
    Architecture(
        library = "keras"
        , analysis_type = "regression"
        , fn_build = fn_build
        , fn_train = fn_train
        , hyperparameters = hyperparameters
    ),

    Trainer(
        pipeline = pipeline
        , repeat_count = 2
    )
)


experiment.run_jobs()

📦 Caching Splits 📦: 100%|██████████████████████████████████████████| 3/3 [00:00<00:00, 382.83it/s]

🔮 Training Models 🔮: 100%|██████████████████████████████████████████| 8/8 [01:39<00:00, 12.43s/it]

📊 Visualization & Interpretation

For more information on visualizing performance metrics, refer to the Dashboard documentation.
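Because the `Target` encoder is a `StandardScaler`, the model predicts standardized temperatures. The sketch below shows how such predictions map back to kelvin (`y = z * std + mean`, the same arithmetic as scikit-learn's `StandardScaler.inverse_transform`) and computes three common regression metrics: MSE, MAE, and R². It is a plain-Python illustration, not the Dashboard's implementation. The predicted values are hypothetical, and the scaling statistics come from the five sample rows rather than from a training split.

```python
import statistics


def inverse_standard_scale(z_values, mean, std):
    """Undo standardization: y = z * std + mean (back to kelvin)."""
    return [z * std + mean for z in z_values]


def regression_metrics(y_true, y_pred):
    """Mean squared error, mean absolute error, and R^2 (coefficient of determination)."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = statistics.fmean([e * e for e in errors])
    mae = statistics.fmean([abs(e) for e in errors])
    mean_true = statistics.fmean(y_true)
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return {"mse": mse, "mae": mae, "r2": 1.0 - ss_res / ss_tot}


# SurfaceTempK values from the sample table; in practice, the training split's statistics apply.
y_true = [707.2, 557.9, 1601.5, 2190.0, 604.0]
mean, std = statistics.fmean(y_true), statistics.pstdev(y_true)

# Hypothetical standardized model outputs, for illustration only.
z_pred = [-0.5, -0.7, 0.6, 1.5, -0.6]
y_pred = inverse_standard_scale(z_pred, mean, std)
print(regression_metrics(y_true, y_pred))
```

Reporting errors in kelvin rather than in standardized units makes the MSE and MAE directly interpretable against the planet temperatures in the dataset.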